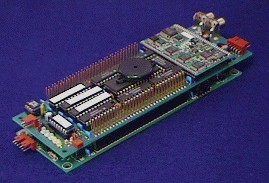

The Cognachrome Vision System is the most popular low-cost, vision-based tracking system used by researchers today. The system's extremely fast tracking output (60 Hertz) means it can be used in very high performance systems, while its small size (2.5"x6.25"x1.25", 8 oz.) means it can be embedded in small robots.

What makes the Cognachrome System unique is special hardware acceleration that enables it to track multiple objects in the visual field, distinguished by color, at a full 60 Hz frame rate, with a resolution of 200x250 pixels. The board can also perform more general-purpose processing on low-resolution 24-bit RGB frame grabs from 64x48 to 64x250 pixels in resolution.
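To make the idea of color-distinguished tracking concrete, here is a minimal software sketch of one way to find a centroid per color in a small RGB frame. It is an illustration only, not the board's hardware-accelerated method: the names `Blob`, `in_range`, and `track_colors`, the frame layout, and the RGB bounds are all assumptions made for this example. It also pools every matching pixel of a color into a single blob rather than separating connected regions.

```python
from collections import namedtuple

# Hypothetical result type: centroid (cx, cy) in pixels and pixel count.
Blob = namedtuple("Blob", ["cx", "cy", "area"])


def in_range(pixel, lo, hi):
    """Return True if every RGB channel of `pixel` lies within [lo, hi]."""
    return all(l <= c <= h for c, l, h in zip(pixel, lo, hi))


def track_colors(frame, color_ranges):
    """Find one centroid per named color range in a frame.

    `frame` is a list of rows, each row a list of (r, g, b) tuples.
    `color_ranges` maps a label to a (lo, hi) pair of RGB bounds.
    Labels with no matching pixels are omitted from the result.
    """
    sums = {name: [0, 0, 0] for name in color_ranges}  # sum_x, sum_y, count
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            for name, (lo, hi) in color_ranges.items():
                if in_range(pixel, lo, hi):
                    s = sums[name]
                    s[0] += x
                    s[1] += y
                    s[2] += 1
    return {
        name: Blob(sx / n, sy / n, n)
        for name, (sx, sy, n) in sums.items()
        if n > 0
    }
```

Calling `track_colors` once per frame with a dictionary such as `{"ball": ((200, 0, 0), (255, 60, 60))}` would yield one position per color per frame, which is the kind of data a 60 Hz tracker delivers.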

Input to the Cognachrome Vision System is standard NTSC, allowing much flexibility in video input source, as well as making it very simple to see the real-time video image (just hook up a standard NTSC monitor). The board also outputs a 60 Hz NTSC image of the objects it is tracking, to help in debugging.

The Cognachrome Vision System allows for several modes of use:

Two Cognachrome Vision Systems were integrated in the new version of the adaptive robot catching project led by Prof. Jean-Jacques Slotine of MIT. The project uses an advanced manipulator and fast-eye gimbals developed under Dr. Kenneth Salisbury of the MIT AI lab.

Using two-dimensional stereo data from a pair of Cognachrome Vision Systems, they predict the three-dimensional trajectory of an object in flight, and control their fast robot arm (the Whole Arm Manipulator, or WAM) to intercept and grasp the object.
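The step from two 2D image positions to a predicted 3D path can be sketched as follows. This is not the MIT project's algorithm. It assumes a simplified setup: two fixed, rectified, parallel cameras (the real system used moving gimbals), image coordinates measured from the principal point with y pointing up, and a drag-free ballistic flight with gravity along the negative y axis. The function names and parameters (`triangulate`, `predict`, `focal_px`, `baseline_m`) are hypothetical.

```python
G = 9.81  # gravitational acceleration in m/s^2, assumed to act along -y


def triangulate(xl, xr, y, focal_px, baseline_m):
    """Recover a 3D point (x, y, z) in the left camera frame.

    `xl` and `xr` are the horizontal image coordinates in the left and
    right cameras, `y` is the shared vertical coordinate (rectified pair).
    """
    disparity = xl - xr
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front")
    z = focal_px * baseline_m / disparity
    return (xl * z / focal_px, y * z / focal_px, z)


def predict(p0, p1, dt, t_ahead):
    """Extrapolate a ballistic path from two 3D samples `dt` seconds apart.

    Returns the predicted position `t_ahead` seconds after sample `p1`.
    """
    # The finite difference gives the velocity at the midpoint of the
    # interval; shift the y component by half a step of gravity to get
    # the velocity at the time of p1.
    vx = (p1[0] - p0[0]) / dt
    vy = (p1[1] - p0[1]) / dt - G * dt / 2
    vz = (p1[2] - p0[2]) / dt
    return (
        p1[0] + vx * t_ahead,
        p1[1] + vy * t_ahead - 0.5 * G * t_ahead ** 2,
        p1[2] + vz * t_ahead,
    )
```

With new samples arriving 60 times per second, a controller could repeat this prediction on every frame and refine the intercept point as the object approaches. A practical system would fit many samples rather than two, to reduce the effect of measurement noise.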

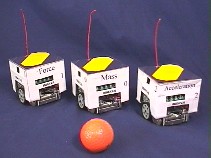

Newton Labs entered (and won) the first International Micro Robot World Cup Soccer Tournament (MIROSOT) held by KAIST in Taejon, Korea, in November of 1996. We used the Cognachrome Vision System to track our three robots (position and orientation), the soccer ball, and the three opposing robots. The 60 Hertz update rate from the vision system was instrumental in our success; other teams obtained robot and ball position data in the 2 to 10 Hertz range. Our win was covered by various news media, including CNN.

Because of the small size of the robots (each fit into a cube 7.5cm on a side), we opted for a single vision system connected to a camera facing down on the field, instead of a vision system in each robot. (In fact, the rules of the contest required colored markings on the top of the robot that encouraged this.)
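One common way to get both position and orientation from an overhead camera is to place two differently colored markers on each robot and combine their centroids. The sketch below assumes that layout, with one marker toward the front and one toward the rear. The marker arrangement and the function name `robot_pose` are assumptions for illustration, not a description of our actual robots.

```python
import math


def robot_pose(front, rear):
    """Estimate a robot's pose from two marker centroids in image coordinates.

    `front` and `rear` are (x, y) centroids of the front and rear markers.
    Returns (x, y, heading), where (x, y) is the midpoint of the markers
    and heading is the angle in radians of the rear-to-front vector,
    measured from the image x axis.
    """
    x = (front[0] + rear[0]) / 2
    y = (front[1] + rear[1]) / 2
    heading = math.atan2(front[1] - rear[1], front[0] - rear[0])
    return (x, y, heading)
```

For example, `robot_pose((3, 4), (1, 2))` places the robot at the midpoint (2, 3) with a heading of 45 degrees from the x axis.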

Find out more about our robot soccer team

The University of Maryland Space Systems Laboratory and the KISS Institute for Practical Robotics have simulated autonomous spacecraft docking in a neutral buoyancy tank for inclusion on the UMD Ranger space vehicle. Using a composite target of three brightly-colored objects designed by David P. Miller, the spacecraft knows its distance and orientation, and can servo to arbitrary positions around the target.
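As a rough illustration of how a target of known size yields distance and orientation, the sketch below applies the pinhole camera model to two tracked markers. It is a simplification under stated assumptions, not the Ranger docking algorithm: it uses only two of the target's objects, assumes the target faces the camera squarely, and the names `range_and_roll`, `focal_px`, and `separation_m` are hypothetical.

```python
import math


def range_and_roll(marker_a, marker_b, separation_m, focal_px):
    """Estimate distance to a target and its roll angle in the image.

    `marker_a` and `marker_b` are (x, y) image centroids in pixels of two
    markers known to be `separation_m` meters apart on the target.
    Returns (distance in meters, roll in radians from the image x axis).
    """
    dx = marker_b[0] - marker_a[0]
    dy = marker_b[1] - marker_a[1]
    separation_px = math.hypot(dx, dy)
    if separation_px == 0:
        raise ValueError("markers coincide; distance is undefined")
    # Pinhole model: apparent size shrinks in proportion to distance.
    distance = focal_px * separation_m / separation_px
    roll = math.atan2(dy, dx)
    return (distance, roll)
```

A third marker, as in the composite target, would let a system also detect when the target is viewed at an angle, which two markers alone cannot distinguish from a greater distance.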

Find out more, including pictures and technical information.

Two robots tied for first place at the "Clean Up the Tennis Court" contest, held at AAAI '96. One team was fielded by Newton Research Labs. The other was led by Sebastian Thrun of Carnegie Mellon University.

Both winning robots used the Cognachrome Vision System. The Cognachrome system provided fast tracking information on the positions of tennis balls, as well as the positions of the randomly-moving, self-powered "squiggle" balls.

Our robot is shown in the Scientific American Frontiers special entitled "Robots Alive!" with host Alan Alda.

We describe more about our winning entry in the article entitled "Dynamic Object Capture Using Fast Vision Tracking", published in the Spring 1997 issue of AI Magazine. For more information, please read the online copy of this paper.

Performance artist and roboticist Barry Werger creates performance robotics pieces using Pioneer mobile robots equipped with the Cognachrome Vision System. Giving the robot and human players appropriately colored tags lets the robots interact with each other, and with humans, at a distance in a theatrically interesting way.

Maja Mataric, Barry Werger, Dani Goldberg, and Francois Michaud at the Volen Center for Complex Systems at Brandeis University study group behavior and social interaction of robots.

Barry Werger says:

"I have combined these two [vision-based long-range obstacle avoidance and vision-based following of intermittently blocked objects] to address some of the problems we have in our mixed robot environment... that is, the Pioneers are faster and bigger than our other, more fragile robots; the long range avoidance allows them to keep a safe distance from other robots, even in fairly dynamic environments, when following a dynamic target. The vision allows us to make these distinctions very easily, which the sonar does not."